Imagine worlds beyond your wildest dreams, brought to life with just a few words. It’s no longer science fiction; it’s the revolutionary reality of Sora, the groundbreaking text-to-video model from OpenAI.

Remember the days when creating professional-looking videos seemed like a distant dream? Well, those days are gone. Sora has ripped open the doors to a world where anyone, regardless of technical expertise, can be a storyteller, an animator, or even a filmmaker.

How Sora Turns Your Words into Worlds

Ever wondered how Sora transforms mere text into vibrant, moving pictures? It’s like having a genie in a bottle, granting your visual wishes with just a few words. While the technical details might involve complex AI algorithms, the basic process can be understood without getting lost in jargon.

Imagine your text as a blueprint: It describes the characters, setting, actions, and overall flow of your desired video. Sora, the master architect, takes this blueprint and translates it into visual components, frame by frame.

Think of it as a three-step process:

- Understanding the blueprint: First, Sora uses natural language processing (NLP) to grasp the meaning and intent behind your text. It identifies key elements, like characters, objects, and actions.

- Building the scene: Then, it draws on patterns learned from the huge number of images and videos it was trained on. It uses this knowledge to work out what the described scene should look like, choosing appropriate visuals and layouts.

- Bringing it to life: Finally, it generates the frames of the video with powerful machine learning models. These models are trained on massive datasets of images and videos, allowing them to create realistic visuals and movements.

Key technologies under the hood:

- Natural Language Processing (NLP): This helps Sora understand the “language” of your text, identifying key elements and intent.

- Machine Learning Models: These are the workhorses that generate the actual video frames, leveraging their training on vast amounts of visual data.

- Diffusion Models: Sora is built on a diffusion model rather than a Generative Adversarial Network (GAN). Instead of pitting a generator against a critic, it starts from random noise and refines it step by step into a clean, realistic video.

Research Techniques

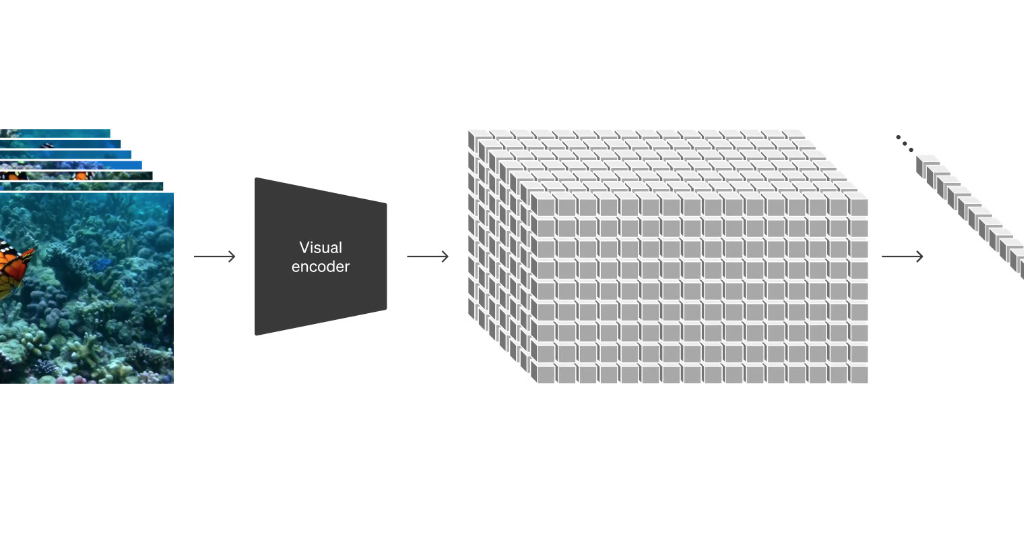

Sora is a diffusion model. Generation starts from a video that looks like static noise, and the model gradually transforms it by removing that noise over many steps. Sora can generate an entire video at once or extend an existing one to make it longer. Because the model sees many frames at a time, a subject stays consistent even when it temporarily goes out of view. Like GPT models, Sora uses a transformer architecture, which OpenAI credits for its superior scaling performance.
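
To make the "start from noise, remove it step by step" idea concrete, here is a minimal toy sketch in plain Python. It is not Sora's implementation: the `toy_denoiser` function is a hypothetical stand-in that simply knows the clean answer, whereas a real diffusion model uses a trained neural network to predict it. The linear noise schedule and the five-number "video" are also assumptions chosen for illustration.

```python
import random

def toy_denoiser(noisy, clean_target):
    """Hypothetical stand-in for the learned network: always predicts the clean signal."""
    return list(clean_target)

def sample(clean_target, num_steps=10, seed=0):
    """Start from pure noise and remove a share of it at every step."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in clean_target]  # static-like starting point
    errors = []
    for t in range(num_steps, 0, -1):
        x0_hat = toy_denoiser(x, clean_target)        # model's guess at the clean result
        keep = (t - 1) / t                            # fraction of remaining noise kept
        x = [p + keep * (v - p) for v, p in zip(x, x0_hat)]
        errors.append(max(abs(v - c) for v, c in zip(x, clean_target)))
    return x, errors

target = [0.0, 0.25, 0.5, 0.75, 1.0]  # stands in for the pixel values of a clean video
frames, errors = sample(target)
print(errors)  # distance from the clean signal after each step, shrinking to 0.0
```

Each pass keeps a smaller share of the remaining noise, so the sample moves steadily from static towards the clean signal and lands on it at the final step.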

Videos and images are represented as collections of smaller units of data called patches, each akin to a token in GPT. This unified representation allows training on a wider range of visual data, spanning different durations, resolutions, and aspect ratios. Sora also builds on earlier DALL·E and GPT research: it uses the recaptioning technique from DALL·E 3, which generates highly descriptive captions for the visual training data, so that generated videos follow the user’s text instructions more faithfully. Beyond text, Sora can animate still images and extend existing videos. OpenAI presents Sora as a foundation for models that can understand and simulate the real world, a capability it considers an important milestone towards Artificial General Intelligence (AGI).
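
The patch idea can be sketched in a few lines of plain Python. This is an illustrative assumption, not Sora's code: `patchify` and `make_video` are hypothetical helpers, the patch size is arbitrary, and the sketch cuts up raw values directly, whereas the technical report describes first compressing the video into a lower-dimensional latent space and then cutting that into spacetime patches.

```python
def patchify(video, pt, ph, pw):
    """Split video[t][y][x] into flattened spacetime patches of size pt x ph x pw."""
    T, H, W = len(video), len(video[0]), len(video[0][0])
    if T % pt or H % ph or W % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    patches = []
    for t0 in range(0, T, pt):
        for y0 in range(0, H, ph):
            for x0 in range(0, W, pw):
                patches.append([
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ])
    return patches

def make_video(T, H, W):
    """Dummy single-channel video whose values are just running indices."""
    return [[[t * H * W + y * W + x for x in range(W)] for y in range(H)] for t in range(T)]

short_square = patchify(make_video(4, 8, 8), 2, 4, 4)   # 4 frames of 8x8
long_wide = patchify(make_video(8, 8, 16), 2, 4, 4)     # 8 frames of 8x16
print(len(short_square), len(long_wide))  # 8 32
```

Both clips become sequences of identically sized patches. Only the sequence length differs, which is why a single transformer can train on videos of varying duration, resolution, and aspect ratio.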

Beyond Text Prompts: What Else Sora Can Do

Sora operates not only on text prompts but also on various inputs like pre-existing images or videos. This versatility empowers Sora to tackle a wide array of image and video editing tasks, including creating perfectly looping videos, animating static images, and extending videos forwards or backwards in time.

Animating DALL·E Images:

Sora can generate videos based on images from DALL·E, a renowned image generation model. OpenAI’s technical report showcases example videos created from DALL·E 2 and DALL·E 3 images.

Extending Generated Videos:

Additionally, Sora can extend videos in either direction, forwards or backwards in time. The technical report presents four videos that were all extended backward from a segment of the same generated video, so each one starts differently but all lead to the same ending.

Video-to-Video Editing:

Diffusion models have enabled many methods for editing images and videos from text prompts. Applying one of them, SDEdit, lets Sora transform the style and environment of an input video zero-shot, that is, without any additional training for the editing task.
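
The core idea of SDEdit is to add a moderate amount of noise to the input and then denoise it, so the result keeps the input's rough structure while taking on the model's style. The toy sketch below illustrates that two-step recipe in plain Python. Everything in it is an assumption for illustration: `toy_denoiser` is a hypothetical stand-in for a trained, prompt-conditioned network, `STYLE_LEVELS` plays the role of the target style, and the `strength` parameter and linear noise schedule are not taken from the Sora report.

```python
import random

STYLE_LEVELS = [0.0, 0.5, 1.0]  # hypothetical "target style": only these values are allowed

def toy_denoiser(x):
    """Hypothetical stand-in for a learned denoiser: snap each value to the nearest style level."""
    return [min(STYLE_LEVELS, key=lambda s: abs(s - v)) for v in x]

def sdedit(source, strength, num_steps=10, seed=0):
    """Noise the source up to an intermediate level, then denoise from there."""
    rng = random.Random(seed)
    t0 = round(strength * num_steps)       # intermediate step to start from
    if t0 == 0:
        return list(source)                # no noise added, so nothing is regenerated
    sigma = t0 / num_steps
    x = [v + sigma * rng.gauss(0.0, 1.0) for v in source]   # 1) partially noise the input
    for t in range(t0, 0, -1):                               # 2) denoise from t0 back to 0
        x0_hat = toy_denoiser(x)
        keep = (t - 1) / t                 # fraction of remaining noise kept
        x = [p + keep * (v - p) for v, p in zip(x, x0_hat)]
    return x

source = [0.1, 0.45, 0.9]                  # stands in for the input video
light_edit = sdedit(source, strength=0.3)
heavy_edit = sdedit(source, strength=1.0)
print(light_edit, heavy_edit)
```

The `strength` setting controls the trade-off: with less added noise the output stays closer to the source, while more noise gives the model more freedom to restyle it.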

Connecting Videos:

Moreover, Sora can gradually interpolate between two distinct input videos, creating seamless transitions despite differences in subjects and scene compositions. The examples in the technical report show smooth transitions between videos with entirely different content.

The Future Beckons: Where Does Sora Lead Us?

Despite its limitations, Sora represents a significant leap forward in text-to-video technology. Its potential applications are vast, from democratizing animation and revolutionizing educational content to enhancing marketing campaigns and personalizing storytelling experiences.

As Sora evolves, we can expect real-time generation, even more artistic styles, and greater control over specific details. This opens doors for interactive experiences and collaborative storytelling on a whole new level.

Conclusion: A New Chapter in Video Creation

Sora is not just a tool; it’s a catalyst for change. It challenges traditional video creation methods, empowers new voices, and ignites exciting possibilities for the future of storytelling. While ethical considerations remain crucial, the potential for positive impact is undeniable. As we embrace this new chapter, it’s essential to remember that the power of storytelling lies not just in the technology, but in the hands of those who wield it.

What are your thoughts on Sora’s potential? Share your ideas and concerns in the comments below!

Reference –

https://openai.com/research/video-generation-models-as-world-simulators